Variational Autoencoders

Definition: Variational Autoencoders (VAEs) introduce a probabilistic approach to learning latent data representations. They consist of an encoder, which maps input data to a latent space, and a decoder, which reconstructs data from the latent space.

Latent Space Representation: VAEs' key innovation is that they model the latent space using probability distributions, so new data can be generated by sampling from these distributions and decoding the samples.

Applications: VAEs are used in image generation, data compression, and anomaly detection. They are particularly useful when generated samples should follow the distribution of the training data.

VAEs are generative models that combine the strengths of autoencoders with probabilistic modelling. Introduced by Kingma and Welling in 2013, VAEs are designed to learn latent representations of data in a way that enables the generation of new, similar data points. Unlike traditional autoencoders, which focus on reconstruction accuracy, VAEs aim to model the underlying data distribution, making them powerful tools for generative tasks.

Architecture of VAEs

Encoder

Function: The encoder maps input data to a latent space represented by latent variables. It outputs the parameters of a probability distribution, typically a Gaussian distribution, rather than a single deterministic point.

Output: The encoder provides the mean and standard deviation of the latent variables, defining the distribution from which the latent representation is sampled.
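To make the encoder's output concrete, here is a minimal sketch in plain Python. It assumes a single linear layer with random, untrained weights, and it assumes the network predicts the log-variance, which is then converted to a standard deviation. The names `encode`, `linear`, and `params` are illustrative and do not come from any particular library.

```python
import math
import random

def linear(weights, bias, x):
    """Apply one affine layer (weights @ x + bias) using plain lists."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]

def encode(x, params):
    """Toy encoder: return the mean and standard deviation of the latent Gaussian."""
    mu = linear(params["w_mu"], params["b_mu"], x)
    log_var = linear(params["w_logvar"], params["b_logvar"], x)
    # Assumption: predict log-variance and exponentiate, so sigma is always positive.
    sigma = [math.exp(0.5 * lv) for lv in log_var]
    return mu, sigma

rng = random.Random(0)
input_dim, latent_dim = 4, 2

def random_matrix(rows, cols):
    """Small random weights standing in for a trained network."""
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]

params = {
    "w_mu": random_matrix(latent_dim, input_dim),
    "b_mu": [0.0] * latent_dim,
    "w_logvar": random_matrix(latent_dim, input_dim),
    "b_logvar": [0.0] * latent_dim,
}

x = [0.2, 0.7, 0.1, 0.9]
mu, sigma = encode(x, params)
print("mean:", mu)
print("std: ", sigma)
```

Each input therefore maps to one mean and one standard deviation per latent dimension, rather than to a single point.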

Latent Space

Definition: The latent space is a lower-dimensional representation of the input data, capturing the essential features while discarding noise and redundancy.

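Sampling: A key innovation in VAEs is the reparameterisation trick, which allows for backpropagation through stochastic sampling. Instead of sampling the latent variable directly, the model samples noise ε from a standard normal distribution, scales it by the encoder's standard deviation σ, and shifts it by the encoder's mean μ (z = μ + σ · ε). The randomness is isolated in ε, so gradients can flow to μ and σ.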
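The sampling step can be sketched in a few lines of plain Python. The function name `reparameterise` and the example values for `mu` and `sigma` are assumptions chosen for illustration. In a real model they would come from the encoder.

```python
import random

def reparameterise(mu, sigma, rng):
    """Draw z = mu + sigma * eps with eps ~ N(0, 1), one value per latent dimension."""
    eps = [rng.gauss(0.0, 1.0) for _ in mu]
    z = [m + s * e for m, s, e in zip(mu, sigma, eps)]
    return z, eps

rng = random.Random(42)
mu, sigma = [0.5, -1.0], [0.1, 2.0]  # hypothetical encoder outputs

z, eps = reparameterise(mu, sigma, rng)
print("one sample:", z)

# Averaging many samples should recover the mean the encoder produced.
samples = [reparameterise(mu, sigma, rng)[0] for _ in range(20000)]
sample_mean = [sum(s[i] for s in samples) / len(samples) for i in range(len(mu))]
print("sample mean:", sample_mean)
```

All of the randomness lives in `eps`. Given a fixed `eps`, `z` is an ordinary deterministic function of `mu` and `sigma`.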

Decoder

Function: The decoder maps the latent variables back to the original data space, reconstructing the input data from its latent representation.

Output: The decoder aims to produce data that resembles the input, allowing the VAE to generate new data points by sampling from the latent space and decoding.
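Generation with the decoder alone can be sketched as follows. This is a toy example in plain Python, assuming a single linear layer followed by a sigmoid (so outputs lie between 0 and 1, like pixel intensities) and random, untrained weights. The names `decode` and `sigmoid` are illustrative.

```python
import math
import random

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

def decode(z, weights, bias):
    """Toy decoder: one affine layer plus sigmoid, mapping latent z to data space."""
    return [sigmoid(sum(w * zj for w, zj in zip(row, z)) + b)
            for row, b in zip(weights, bias)]

rng = random.Random(1)
latent_dim, data_dim = 2, 4

# Random weights stand in for a trained decoder.
weights = [[rng.gauss(0.0, 0.5) for _ in range(latent_dim)] for _ in range(data_dim)]
bias = [0.0] * data_dim

# To generate new data, sample z from the prior N(0, I) and decode it.
z = [rng.gauss(0.0, 1.0) for _ in range(latent_dim)]
x_new = decode(z, weights, bias)
print("generated:", x_new)
```

No encoder is involved at generation time. The latent vector comes straight from the prior distribution.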

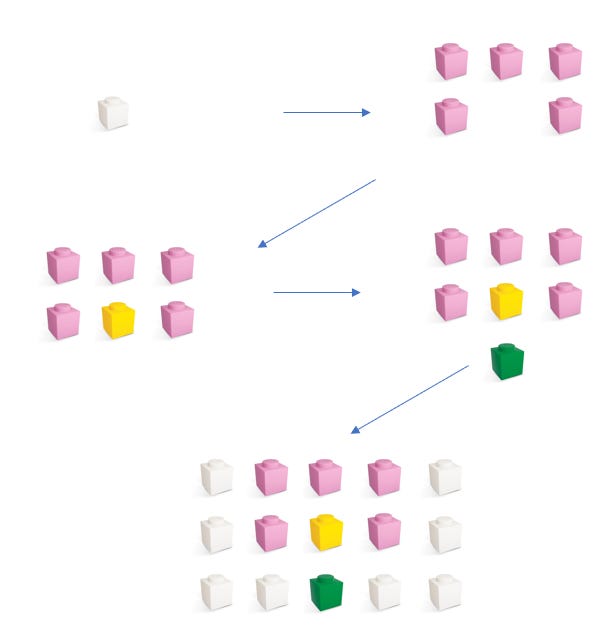

Figure – VAE effect of image decompression

Training VAEs

Loss Function

Reconstruction Loss: Measures how accurately the decoder reconstructs the input data from the latent representation. Typically, mean squared error (MSE) or binary cross-entropy is used.

KL Divergence: The Kullback-Leibler (KL) divergence term ensures that the learned latent distribution is close to a prior distribution (usually a standard normal distribution). This regularisation term prevents overfitting and encourages smooth latent spaces.
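When the encoder outputs a diagonal Gaussian and the prior is a standard normal distribution, the KL term has a well-known closed form: 0.5 · Σ (σ² + μ² − 1 − ln σ²). The sketch below evaluates it in plain Python. The function name `kl_to_standard_normal` is an illustrative choice.

```python
import math

def kl_to_standard_normal(mu, sigma):
    """KL( N(mu, diag(sigma^2)) || N(0, I) ), summed over latent dimensions."""
    return 0.5 * sum(s * s + m * m - 1.0 - math.log(s * s) for m, s in zip(mu, sigma))

# The divergence is zero when the latent distribution already matches the prior.
kl_match = kl_to_standard_normal([0.0, 0.0], [1.0, 1.0])
print(kl_match)  # 0.0

# Moving the mean away from zero increases the penalty.
kl_shifted = kl_to_standard_normal([1.0], [1.0])
print(kl_shifted)  # 0.5
```

The penalty grows as the mean moves away from zero or as the standard deviation moves away from one, which is what pulls the learned latent distribution towards the prior.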

Optimisation

Objective: The total loss combines the reconstruction loss and the KL divergence. The VAE aims to minimise this combined loss during training.
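As a rough illustration of the combined objective, the sketch below adds a reconstruction term to the closed-form KL term for a single example. It assumes a squared-error reconstruction loss that is summed rather than averaged over data dimensions, and equal weighting of the two terms. The name `vae_loss` and all input values are hypothetical.

```python
import math

def squared_error(x, x_hat):
    """Reconstruction term: squared error summed over data dimensions (an assumption)."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat))

def kl_to_standard_normal(mu, sigma):
    """Closed-form KL between a diagonal Gaussian and the standard normal prior."""
    return 0.5 * sum(s * s + m * m - 1.0 - math.log(s * s) for m, s in zip(mu, sigma))

def vae_loss(x, x_hat, mu, sigma):
    """Total loss for one example: reconstruction term plus KL term."""
    recon = squared_error(x, x_hat)
    kl = kl_to_standard_normal(mu, sigma)
    return recon + kl, recon, kl

x = [1.0, 0.0]        # input
x_hat = [0.5, 0.5]    # hypothetical decoder output
mu, sigma = [1.0], [1.0]  # hypothetical encoder output

total, recon, kl = vae_loss(x, x_hat, mu, sigma)
print("reconstruction:", recon, "KL:", kl, "total:", total)
```

Whether the reconstruction term is summed or averaged changes its weight relative to the KL term, so the two conventions can lead to different trade-offs between reconstruction quality and latent regularity.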

Backpropagation: The reparameterisation trick allows for efficient backpropagation through stochastic sampling, enabling gradient-based optimisation techniques.
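One way to see why the trick makes gradients available is to fix the noise and treat the latent value as an ordinary function of the mean and standard deviation. The sketch below uses a toy scalar loss, z², which is an assumption for illustration only, and checks the hand-derived gradients against finite differences.

```python
def loss_given_noise(mu, sigma, eps):
    """Toy loss on a reparameterised latent value: z = mu + sigma * eps, loss = z^2."""
    z = mu + sigma * eps
    return z * z

def analytic_grads(mu, sigma, eps):
    """Chain rule with eps held fixed: dz/dmu = 1 and dz/dsigma = eps."""
    z = mu + sigma * eps
    return 2.0 * z, 2.0 * z * eps

def numeric_grads(mu, sigma, eps, h=1e-6):
    """Central finite differences, used only to check the analytic gradients."""
    d_mu = (loss_given_noise(mu + h, sigma, eps) - loss_given_noise(mu - h, sigma, eps)) / (2 * h)
    d_sigma = (loss_given_noise(mu, sigma + h, eps) - loss_given_noise(mu, sigma - h, eps)) / (2 * h)
    return d_mu, d_sigma

mu, sigma, eps = 0.5, 1.5, -0.3  # eps would normally be drawn from N(0, 1)
g_analytic = analytic_grads(mu, sigma, eps)
g_numeric = numeric_grads(mu, sigma, eps)
print("analytic:", g_analytic)
print("numeric: ", g_numeric)
```

Because the noise is an input rather than an internal sampling step, the loss has well-defined derivatives with respect to both encoder outputs, and a gradient-based optimiser can update the encoder.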

VAEs are powerful generative models that combine autoencoders' strengths with probabilistic modelling. By learning latent representations of data, VAEs enable the generation of new data points, data compression, and anomaly detection, among other applications. Understanding the architecture and training process of VAEs provides a solid foundation for exploring their potential in various domains. In the next section, we will delve into Transformer models, another crucial type of generative AI model, examining their architectures, functionalities, and use cases.

Learn more about Generative AI Models, in particular the challenges and future directions for each main model, in our article:

https://buildingcreativemachines.substack.com/p/generative-ai-models-challenges-and