Understanding Multimodal LLMs: An Overview

Multimodal large language models (LLMs) are AI models that process and reason over several input modalities, such as text, images, audio, and video. This lets them handle tasks that combine different forms of data, for example answering a text question about an image. The recent surge in AI research has accelerated work on multimodal models, moving LLMs beyond their traditional text-only scope.

Core Techniques:

Unified Embedding Decoder Architecture (Method A):

This method integrates images and text by converting each image into token embeddings that have the same dimension as the text token embeddings. The image and text embeddings can then be concatenated into one sequence and processed by a standard decoder-only LLM. The process typically involves:

Image tokenization: Dividing an image into patches.

Embedding projection: Transforming these patches into vectors compatible with the LLM.

This method is simple and reuses existing LLM architectures unchanged. Fuyu is one example: it learns image embeddings directly by passing image patches through a projection layer.
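The two steps above can be sketched in a few lines. This is a minimal, dependency-free illustration rather than any model's actual implementation: the toy sizes (`H`, `W`, `C`, `PATCH`, `D_MODEL`), the random projection matrix, and the stand-in text embeddings are all assumptions chosen for readability, and plain Python lists are used instead of a tensor library.

```python
import random

def patchify(image, patch):
    """Split an H x W x C image (nested lists) into flattened patch vectors."""
    height, width = len(image), len(image[0])
    patches = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            flat = [
                channel
                for row in image[top:top + patch]
                for pixel in row[left:left + patch]
                for channel in pixel
            ]
            patches.append(flat)
    return patches

def project(vectors, weight):
    """Linear projection: multiply each vector by a (d_in x d_model) matrix."""
    return [
        [sum(x * w for x, w in zip(vec, column)) for column in zip(*weight)]
        for vec in vectors
    ]

rng = random.Random(0)
H, W, C, PATCH, D_MODEL = 4, 4, 3, 2, 8  # toy sizes, assumed for illustration

image = [[[rng.random() for _ in range(C)] for _ in range(W)] for _ in range(H)]
# Projection weights; randomly initialised here, learned in a real model.
weight = [[rng.gauss(0, 0.02) for _ in range(D_MODEL)] for _ in range(PATCH * PATCH * C)]

image_tokens = project(patchify(image, PATCH), weight)  # 4 patches -> 4 vectors of size 8
# Stand-in for the output of the LLM's text embedding layer (5 text tokens).
text_tokens = [[rng.gauss(0, 0.02) for _ in range(D_MODEL)] for _ in range(5)]

sequence = image_tokens + text_tokens  # one sequence fed to the decoder
print(len(sequence), len(sequence[0]))  # prints: 9 8
```

Because the image tokens end up with the same width as the text tokens, the decoder itself needs no changes; the cost is that every image patch lengthens the input sequence.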

Cross-Modality Attention Architecture (Method B):

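Here, image and text embeddings are combined inside the LLM's multi-head attention layers through cross-attention: the text tokens supply the queries, and the image features supply the keys and values. This lets the model focus selectively on the relevant parts of the image during both training and inference.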

This approach often relies on a pre-trained vision model such as CLIP to encode the image, with training focused on the cross-attention layers rather than on significant changes to the base LLM.
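A single-head version of this cross-attention step can be sketched as follows. It is an illustrative sketch under stated assumptions, not a production layer: the function names, the toy dimensions, and the random weights are hypothetical, and real models use multiple heads, learned weights, and an output projection.

```python
import math
import random

def matmul(a, b):
    """Multiply two matrices stored as nested lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(row):
    """Numerically stable softmax over one row of scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(text, image, w_q, w_k, w_v):
    """Single-head cross-attention: queries from text, keys/values from image."""
    q = matmul(text, w_q)    # (T, d)
    k = matmul(image, w_k)   # (N, d)
    v = matmul(image, w_v)   # (N, d)
    scale = math.sqrt(len(q[0]))
    scores = [[sum(a * b for a, b in zip(qi, kj)) / scale for kj in k] for qi in q]
    weights = [softmax(row) for row in scores]  # (T, N), each row sums to 1
    return matmul(weights, v), weights          # output is (T, d)

rng = random.Random(0)
T, N, D = 3, 4, 8  # text tokens, image patches, embedding width (toy values)

def rand_matrix(rows, cols):
    return [[rng.gauss(0, 1) for _ in range(cols)] for _ in range(rows)]

text, image = rand_matrix(T, D), rand_matrix(N, D)
w_q, w_k, w_v = rand_matrix(D, D), rand_matrix(D, D), rand_matrix(D, D)

output, weights = cross_attention(text, image, w_q, w_k, w_v)
print(len(output), len(output[0]))  # prints: 3 8
```

The output has one row per text token, so the text sequence keeps its length; the image contributes only through the attention weights over its patches.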

Image source: A3 Logics

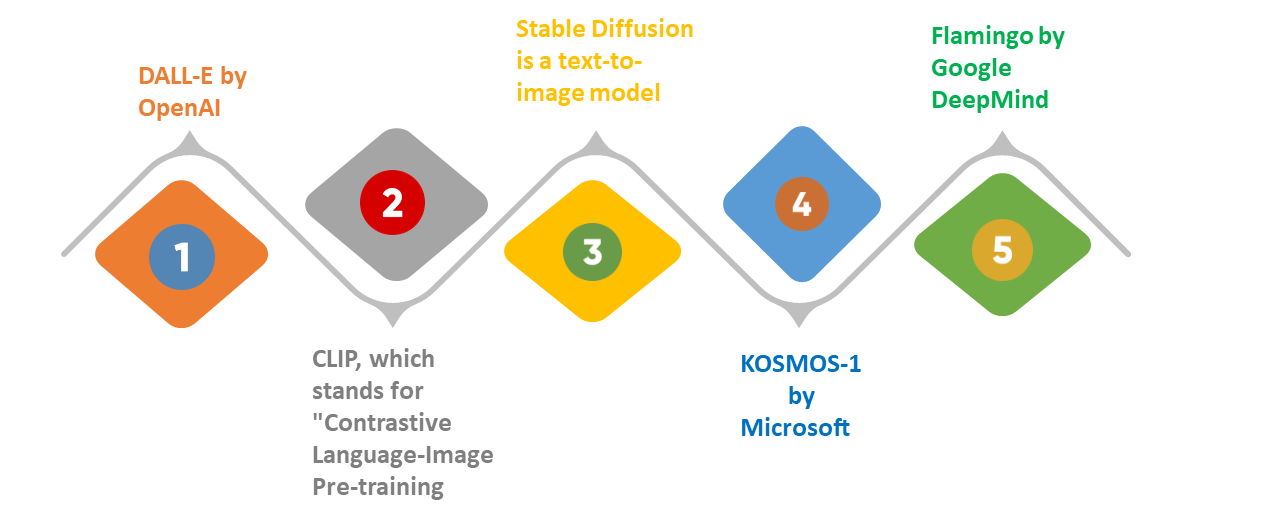

Recent Innovations

Llama 3.2 by Meta AI: A standout recent example. The multimodal Llama 3.2 models, available in 11B and 90B parameter versions, use the cross-attention approach. Key insights include:

The image encoder is a vision transformer (ViT-H/14) that Meta trained in-house, in a separate pretraining phase before it was connected to the LLM.

Unusually, multimodal training updates the image encoder while the LLM's own parameters stay frozen, which preserves the pre-trained LLM's language capabilities for text-only tasks.

The adapter (cross-attention) layers are inserted only in every fourth transformer block, so they handle multimodal interaction without altering the LLM's fundamental structure.
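The "every fourth block" layout can be pictured with a small helper. This is only a sketch: the function name, the block count of 8, and the choice to attach the extra layer to blocks 4, 8, and so on (rather than some other offset) are assumptions for illustration and are not taken from the Llama 3.2 code.

```python
def layer_plan(num_blocks, every=4):
    """Label each decoder block; every `every`-th block also gets cross-attention."""
    return [
        "self-attn + cross-attn" if (index + 1) % every == 0 else "self-attn"
        for index in range(num_blocks)
    ]

plan = layer_plan(8)  # toy depth, assumed for illustration
for index, kind in enumerate(plan):
    print(index, kind)
```

With this spacing only a quarter of the blocks carry the extra cross-attention parameters, which keeps the added parameter count well below what attaching one to every block would cost.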

Practical Applications:

Image captioning: Generating text descriptions for given images.

Document understanding: Extracting data from complex formats like tables in PDFs and converting it into structured outputs (e.g., LaTeX).

Multimodal dialogue systems: Responding in context to mixed inputs, such as answering a question about an image or following an instruction that refers to one.

Training Considerations

Training Phases:

Pretraining: Training starts from a pre-trained text-only LLM, so the model already carries general language knowledge.

Multimodal finetuning: The model is then finetuned to align its responses across modalities, often with the image encoder frozen at first so that training focuses on the projection or adapter layers.
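One way to make the freezing schedule explicit is to write down which components are trainable in each stage. The sketch below is hypothetical throughout: the component names, the two stage labels, and in particular the decision to unfreeze the image encoder in the later stage are assumptions for illustration, since the schedule differs from model to model.

```python
from dataclasses import dataclass

# Hypothetical component names for a generic multimodal LLM.
COMPONENTS = ("image_encoder", "projector", "llm")

@dataclass(frozen=True)
class Stage:
    name: str
    trainable: frozenset

    def frozen(self):
        """Components whose weights are not updated in this stage."""
        return [c for c in COMPONENTS if c not in self.trainable]

SCHEDULE = [
    # Image encoder frozen at first: only the projection/adapter layers learn.
    Stage("multimodal finetuning (initial)", frozenset({"projector"})),
    # Assumed later step: the image encoder is unfrozen as well.
    Stage("multimodal finetuning (later)", frozenset({"projector", "image_encoder"})),
]

for stage in SCHEDULE:
    print(f"{stage.name}: train={sorted(stage.trainable)} frozen={stage.frozen()}")
```

In a deep learning framework, the same table would typically drive which parameters have gradients enabled before each stage begins.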

Challenges and Benefits:

Method A is easier to implement because it requires minimal changes to the LLM architecture. Method B is generally more computationally efficient because the image tokens do not lengthen the input sequence; they enter the model through the cross-attention layers instead.

The choice between these methods depends on the trade-offs between implementation complexity, parameter overhead, and computational efficiency.
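The computational side of this trade-off can be made concrete with a rough count of attention score pairs. This is back-of-the-envelope arithmetic under simplifying assumptions: it ignores causal masking, the number of heads, and the feed-forward layers, and the token counts, depth, and cross-attention spacing are illustrative values rather than measurements of any real model.

```python
def method_a_pairs(text_tokens, image_tokens, layers):
    """Self-attention over the concatenated sequence in every layer."""
    seq = text_tokens + image_tokens
    return layers * seq * seq

def method_b_pairs(text_tokens, image_tokens, layers, cross_every=4):
    """Text-only self-attention in every layer, plus cross-attention in some layers."""
    self_pairs = layers * text_tokens * text_tokens
    cross_pairs = (layers // cross_every) * text_tokens * image_tokens
    return self_pairs + cross_pairs

T, N, LAYERS = 256, 1024, 32  # assumed text tokens, image tokens, decoder depth

a = method_a_pairs(T, N, LAYERS)
b = method_b_pairs(T, N, LAYERS)
print(a, b, a / b)  # prints: 52428800 4194304 12.5
```

Under these assumptions Method A computes about 12.5 times as many attention scores, because its cost grows with the square of the combined sequence length. Method B pays for this with additional cross-attention parameters, which is the parameter overhead mentioned above.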

Multimodal LLMs represent a significant leap in AI, enabling a richer, more integrated approach to understanding complex data. The evolution from text-only LLMs to models like Llama 3.2 shows a trajectory toward greater versatility and more practical applications. Future developments will likely make cross-modality processing more efficient, add further modalities, and improve training methods to balance performance with scalability.